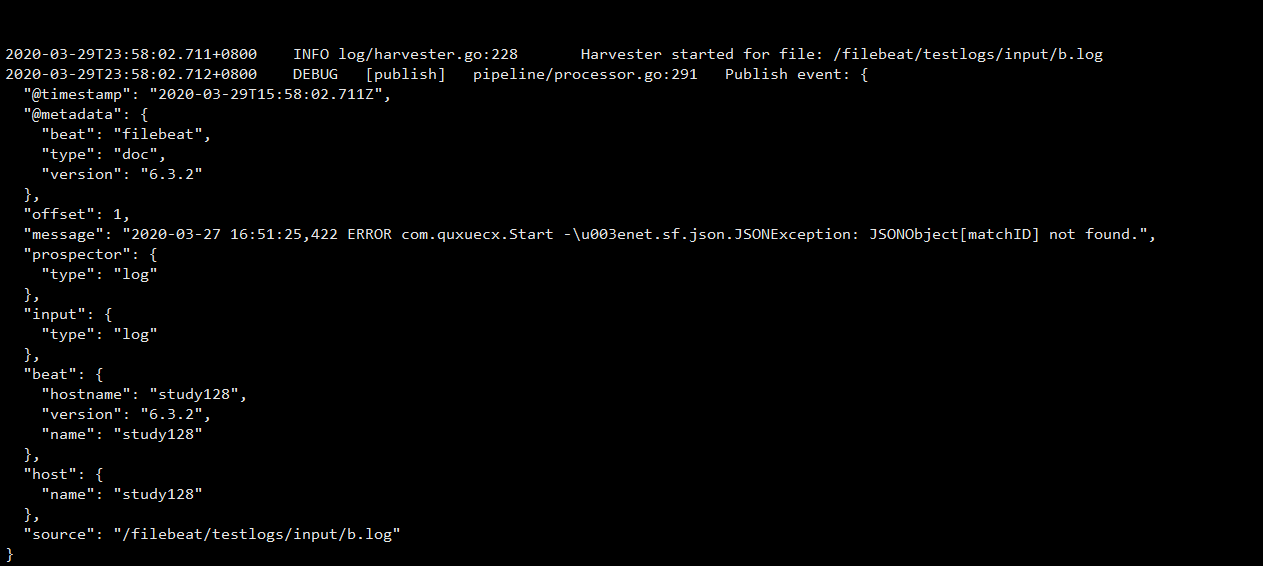

Of the factory method's arguments, the two we're going to focus on are the outputs.Observer and the common.Config.

An outputs.Observer tracks statistics about how our clients are faring, which we will dive into later. The common.Config argument represents our output's configuration, which we can unpack into a custom struct. As for what we might want to make configurable, there's a bit to unpack there.

Filebeat supports publishing log events to a number of clients concurrently, each receiving events in batches of a configurable size. For each log event, it will also retry publishing a certain number of times in the face of failure. Given that we are building an HTTP output, we also need a way to tell the client where it should post log event data.

Each of these is made configurable by a struct whose fields carry tags that filebeat understands.

Iterating through the events, we build our log entry structs (more on that in a minute). Once we've got all of our entries, we serialize them into JSON and attempt to POST them to our configured endpoint. If we succeed, we observe the number of events we've successfully processed and use batch.Ack() to notify filebeat that everything went fine. If something goes wrong when POSTing the data, we tell filebeat it should retry the events that make up this batch.
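The publish flow described here (build entries, serialize to JSON, POST, then acknowledge or retry) can be sketched roughly as follows. This is a minimal illustration using only the standard library: `Event`, `Batch`, `Observer`, `LogEntry`, and `Client` are hypothetical stand-ins, not libbeat's real types, and the exact batch and observer method names in libbeat depend on the version you build against.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"time"
)

// Event and Batch are simplified stand-ins for libbeat's event and batch types.
type Event struct {
	Timestamp time.Time
	Message   string
}

type Batch struct {
	Events  []Event
	Acked   bool
	Retried bool
}

func (b *Batch) Ack()   { b.Acked = true }   // everything was delivered
func (b *Batch) Retry() { b.Retried = true } // ask for the batch to be resent

// Observer is a stand-in that only counts acknowledged events.
type Observer struct{ Acked int }

// LogEntry is a hypothetical JSON shape for one log line.
type LogEntry struct {
	Timestamp string `json:"timestamp"`
	Message   string `json:"message"`
}

// Client posts batches to Endpoint; a nil HTTP falls back to http.DefaultClient.
type Client struct {
	Endpoint string
	HTTP     *http.Client
	Observer *Observer
}

// Publish converts the batch to JSON and POSTs it, acking on success
// and requesting a retry on any failure.
func (c *Client) Publish(batch *Batch) error {
	entries := make([]LogEntry, 0, len(batch.Events))
	for _, ev := range batch.Events {
		entries = append(entries, LogEntry{
			Timestamp: ev.Timestamp.UTC().Format(time.RFC3339),
			Message:   ev.Message,
		})
	}
	body, err := json.Marshal(entries)
	if err != nil {
		batch.Retry()
		return err
	}
	hc := c.HTTP
	if hc == nil {
		hc = http.DefaultClient
	}
	resp, err := hc.Post(c.Endpoint, "application/json", bytes.NewReader(body))
	if err != nil {
		batch.Retry()
		return err
	}
	defer resp.Body.Close()
	if resp.StatusCode < 200 || resp.StatusCode > 299 {
		batch.Retry()
		return fmt.Errorf("unexpected status %d", resp.StatusCode)
	}
	c.Observer.Acked += len(entries)
	batch.Ack()
	return nil
}

func main() {
	// A local test server stands in for the real log endpoint.
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.WriteHeader(http.StatusOK)
	}))
	defer srv.Close()

	c := &Client{Endpoint: srv.URL, HTTP: srv.Client(), Observer: &Observer{}}
	b := &Batch{Events: []Event{{Timestamp: time.Now(), Message: "hello"}}}
	err := c.Publish(b)
	fmt.Println(err, b.Acked, c.Observer.Acked)
}
```

Treating any non-2xx status as a failure keeps the retry decision in one place. A real output would likely distinguish retryable errors from permanent ones.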

We register our custom output under the name http and associate that with a factory method for creating that output. For the factory method, we take in some arguments and return the result of a function wrapping a slice of outputs.NetworkClient objects.
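To make the register-then-construct pattern concrete, here is a small self-contained sketch. It deliberately does not import libbeat: `Config`, `Observer`, `NetworkClient`, `Factory`, `RegisterType`, and `makeHTTP` below are simplified stand-ins written for illustration, and the real libbeat signatures take more arguments and vary between versions.

```go
package main

import (
	"errors"
	"fmt"
)

// Config is a stand-in for the raw output configuration.
type Config map[string]string

// Observer is a stand-in for the statistics tracker passed to the factory.
type Observer struct{ Acked int }

// NetworkClient is a stand-in for the client interface an output returns.
type NetworkClient interface {
	String() string
}

// Factory builds the clients for one output from its configuration.
type Factory func(observer *Observer, cfg Config) ([]NetworkClient, error)

// registry maps an output name to the factory that creates it.
var registry = map[string]Factory{}

// RegisterType associates a name with a factory, rejecting duplicates.
func RegisterType(name string, f Factory) error {
	if _, exists := registry[name]; exists {
		return fmt.Errorf("output %q already registered", name)
	}
	registry[name] = f
	return nil
}

type httpClient struct {
	endpoint string
	observer *Observer
}

func (c *httpClient) String() string { return "http(" + c.endpoint + ")" }

// makeHTTP is the factory: it validates the config and returns a slice of clients.
func makeHTTP(observer *Observer, cfg Config) ([]NetworkClient, error) {
	endpoint, ok := cfg["endpoint"]
	if !ok || endpoint == "" {
		return nil, errors.New("http output: endpoint is required")
	}
	return []NetworkClient{&httpClient{endpoint: endpoint, observer: observer}}, nil
}

// Registration happens at package load time, before any output is configured.
func init() {
	if err := RegisterType("http", makeHTTP); err != nil {
		panic(err)
	}
}

func main() {
	clients, err := registry["http"](&Observer{}, Config{"endpoint": "http://localhost:8080/logs"})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(clients[0])
}
```

Registering in `init` means the output becomes available simply by importing the package, which is how a plugin-style design can pick up new outputs without a central list.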

Essentially, all of the bundled outputs are just plugins themselves. Using the Elasticsearch output plugin as an example, we can infer the initial skeleton for our own custom output.

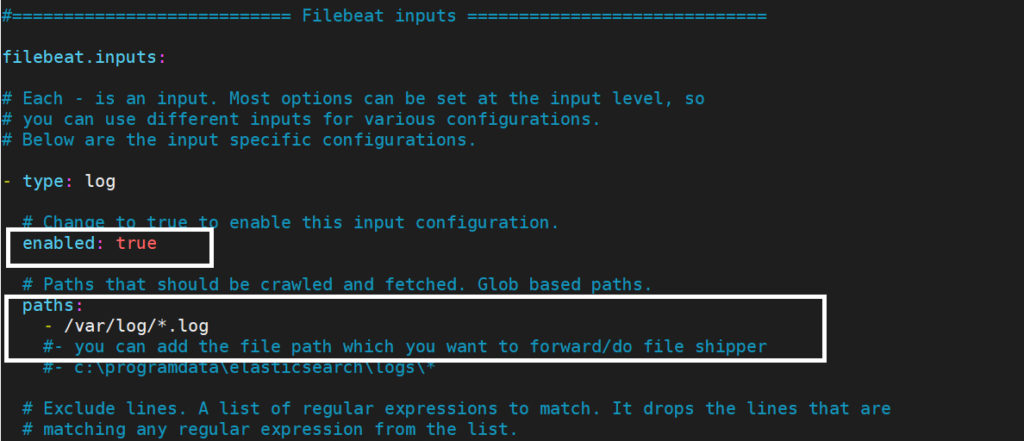

Filebeat settings code

At FullStory, our services produce hundreds of thousands of log lines a second. At the front of this pipeline is Filebeat, a tool we use to process and ship this data to our log storage and query service. The catch? Filebeat did not support our destination out of the box, so we were left with only one choice: build it ourselves. Today, we're going to walk through how you, too, can write your own output plugin for filebeat using the Go programming language.

What is Filebeat?

Filebeat is an open source tool provided by the team at Elastic that describes itself as a “lightweight shipper for logs”. Like other tools in the space, it essentially takes incoming data from a set of inputs and “ships” it to a single output. It supports a variety of these inputs and outputs, but generally it is a piece of the ELK (Elasticsearch, Logstash, Kibana) stack, tailing log files on your VMs and shipping them either straight into Elasticsearch or to a Logstash server for further processing.

The main thing you need to know when writing a custom plugin is that filebeat is really just a collection of Go packages built on libbeat, which itself is just the underlying set of shared libraries making up the beats open source project. Because of this, and how well organized the filebeat code actually is, it’s pretty easy to reverse-engineer how we might go about writing our own plugin.

For instance, we know from the documentation that filebeat supports an Elasticsearch output, and a quick grep of the code base reveals how that output is defined.
